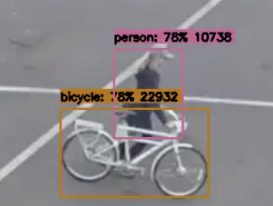

I was thinking of installing Scrypted on my Proxmox server to see what progress had been made since I last tested it about a year ago. From the documentation I saw that it can now use a Coral TPU for object detection. I have a Coral TPU installed on the server, and I’m using it for object detection in Frigate NVR.

Naturally I was curious whether I could use the TPU from two different LXCs simultaneously. Since it’s not really PCI passthrough, I thought it might be possible. As I usually do in these cases, I asked Google to find me the answer:

Google search:

proxmox use m.2 pci coral tpu on 2 different LXC simultaneously

Result:

Basically a useless search result. In the mid-1990s I was actually working on a project for Google that involved evaluating search results. I know for a fact that even then the Google search engine was better than the result above would indicate. It puzzles me why the quality of Google searches has recently been heading in the wrong direction.

I then had the idea of asking Google Gemini instead (could that have been Google’s intention all along?).

On Google Gemini I typed:

I have a server running Proxmox. I have an m.2 pci Google Coral TPU installed. I have installed the drivers for it, and using it for a Frigate NVR installation on an LXC. If I install Scrypted NVR in another LXC, will I be able to access and use the Coral TPU simultaneously on Frigate and Scrypted?

Result:

Simultaneous Access to Google Coral TPU in Proxmox LXC Containers

1. Introduction

The Proxmox Virtual Environment (VE) is a widely adopted open-source server virtualization platform that allows users to manage virtual machines (VMs) and lightweight Linux containers (LXC) from a single web interface.1 LXC containers offer an efficient way to run isolated applications with minimal overhead by sharing the host kernel. In the realm of home automation and surveillance, Network Video Recorder (NVR) software like Frigate and Scrypted are popular choices for recording and analyzing video feeds from IP cameras.3 These applications often incorporate object detection functionalities to identify specific entities within the video streams. To accelerate the computationally intensive task of object detection, users frequently employ dedicated hardware accelerators such as the Google Coral Tensor Processing Unit (TPU).6 The Google Coral TPU is a purpose-built ASIC designed to significantly enhance the performance of machine learning inference, particularly for TensorFlow Lite models, and is available in various form factors, including m.2 PCI cards that can be installed directly into a server’s expansion slots.8 This report addresses the user’s specific query regarding the feasibility of simultaneously accessing and utilizing a single m.2 PCI Google Coral TPU installed in a Proxmox server by two separate LXC containers, one running Frigate NVR and the other running Scrypted NVR. The objective of this analysis is to provide a comprehensive, data-driven answer to this question, based on available research, and to offer relevant recommendations.

The user’s inquiry reflects a common scenario encountered by individuals managing self-hosted smart home systems. There is a natural inclination to optimize the utilization of hardware resources, especially specialized accelerators like the Google Coral TPU, across multiple applications running on the same infrastructure. The desire to leverage the power of the TPU for both Frigate and Scrypted within a Proxmox environment highlights a broader trend in the self-hosted community towards maximizing efficiency and performance in home automation setups. Furthermore, the choice of an m.2 PCI form factor for the Coral TPU suggests a preference for potentially higher performance and a more integrated hardware solution compared to alternatives like USB-based TPUs. However, this decision also introduces technical considerations related to how PCI devices are accessed and shared within a virtualized environment like Proxmox LXC, which might not be as straightforward as with USB devices.

2. Understanding PCI Device Access in Proxmox LXC Containers

Proxmox VE enables LXC containers to access hardware resources present on the host system, but the underlying mechanism differs significantly from the direct PCI passthrough employed for KVM-based virtual machines.1 In the context of VMs, true PCI passthrough involves dedicating an entire PCI device to the virtual machine, granting it exclusive and direct hardware access while making it unavailable to the host. In contrast, LXC containers, being lightweight and sharing the host kernel, access hardware through a mediated approach, primarily by interacting with device files that are created and managed by the host kernel.15 Therefore, when discussing “PCI passthrough” in the context of LXC, it more accurately refers to the process of granting the container access to these device files, allowing applications within the container to communicate with the underlying hardware through the host kernel and its drivers.

The typical procedure for enabling an LXC container to access a PCI device, such as the Google Coral TPU, involves several key steps. First and foremost, the appropriate drivers for the Google Coral TPU (e.g., gasket-dkms and libedgetpu1-std for PCIe/M.2 variants) must be installed on the Proxmox host operating system.3 Since LXC containers share the host kernel, the presence and proper functioning of these drivers at the host level are fundamental for the containers to interact with the TPU. Once the drivers are installed, the next step is to identify the device node associated with the Coral TPU. For PCIe and M.2 versions, this is typically represented by the device file /dev/apex_0. This device node can be identified on the host using commands like lspci -nn | grep 089a to confirm the presence of the Coral TPU as a PCI device and then ls -l /dev/apex* to verify the existence of the corresponding device file in the /dev directory.3
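The device-node check described above can also be scripted. The following is a minimal sketch, not an official tool: the function name find_char_devices is made up for illustration, and it assumes a Linux host where the Coral driver exposes its nodes under /dev/apex_*. It lists matching character devices together with the major and minor numbers that the container configuration needs later.

```python
import glob
import os
import stat


def find_char_devices(pattern="/dev/apex_*"):
    """Return (path, major, minor) for each character device matching pattern."""
    found = []
    for path in sorted(glob.glob(pattern)):
        info = os.stat(path)
        # Skip anything that is not a character device (regular files, directories).
        if stat.S_ISCHR(info.st_mode):
            found.append((path, os.major(info.st_rdev), os.minor(info.st_rdev)))
    return found


if __name__ == "__main__":
    # Prints nothing if the driver has not created any /dev/apex_* node.
    for path, major, minor in find_char_devices():
        print(f"{path}: major={major} minor={minor}")
```

An empty result on the host usually means the driver is not loaded, in which case no container configuration will help.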

After identifying the device node, the LXC container needs to be explicitly configured to allow access to it. This configuration is typically done by modifying the container’s configuration file, located at /etc/pve/lxc/<VMID>.conf, where <VMID> is the unique numerical identifier of the LXC container. Two primary configuration keys are used for this purpose: lxc.cgroup2.devices.allow and lxc.mount.entry.20 The lxc.cgroup2.devices.allow key is used to control the permissions that the container has to create and interact with device nodes. For the Google Coral TPU, a typical entry might look like lxc.cgroup2.devices.allow: c 120:0 rwm, assuming that the major device number for the apex device is 120 (this can be confirmed on the host using ls -l /dev/apex_0). The c signifies a character device, 120:0 represents the major and minor device numbers, and rwm grants read, write, and mknod permissions to the container.26 For USB-based Coral TPUs, the major device number would likely be different (e.g., 189), and the configuration might involve allowing access to a range of minor device numbers associated with USB devices using a wildcard (e.g., lxc.cgroup2.devices.allow: c 189:* rwm).26 The lxc.mount.entry key is then used to bind-mount the device node from the host’s /dev directory into the container’s /dev directory, making it accessible to applications running inside the container. A typical entry for the Coral TPU would be lxc.mount.entry: /dev/apex_0 dev/apex_0 none bind,optional,create=file.20 The bind option creates a link to the host’s device, and create=file ensures that the device file is created within the container if it does not already exist.
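To make the two configuration keys concrete, here is a small sketch that renders the lines quoted above from a device path and its major and minor numbers. The helper name lxc_device_lines is hypothetical, and the value 120 is only the example number from the text; the real major number should be read from the host.

```python
def lxc_device_lines(device_path, major, minor):
    """Render the two LXC config lines that expose one host character device."""
    if not device_path.startswith("/dev/"):
        raise ValueError("expected an absolute path under /dev")
    # The mount target is written relative to the container's root filesystem.
    relative = device_path.lstrip("/")
    return [
        f"lxc.cgroup2.devices.allow: c {major}:{minor} rwm",
        f"lxc.mount.entry: {device_path} {relative} none bind,optional,create=file",
    ]


if __name__ == "__main__":
    # Example values only: 120:0 is the assumption used in the text above.
    for line in lxc_device_lines("/dev/apex_0", 120, 0):
        print(line)
```

The printed lines would be appended to /etc/pve/lxc/<VMID>.conf for each container that should see the device.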

Finally, it’s important to consider whether to run the LXC container in a privileged or unprivileged mode.1 Privileged containers, created by unchecking the “unprivileged container” option during creation in the Proxmox web interface, offer less security isolation between the container and the host but might simplify access to certain hardware resources. Unprivileged containers, while generally more secure, require more careful configuration to grant the necessary permissions for device access, potentially involving the creation of custom udev rules on the host to manage device ownership and permissions persistently.20

The ability of LXC containers to access PCI devices in Proxmox is fundamentally dependent on the host kernel’s ability to recognize and manage these devices through its drivers. If the host system does not have the correct drivers installed or if they are not functioning properly, the LXC containers will not be able to access the Google Coral TPU, regardless of the container’s configuration. Furthermore, the precise configuration of cgroup2 device permissions and mount entries in the LXC configuration file is critical for granting the container the necessary rights to interact with the TPU’s device node. Incorrect or insufficient permissions will prevent applications within the container from communicating with the TPU. While the term “PCI passthrough” is commonly used in this context, it’s crucial to recognize that it is technically device node sharing, with the host kernel remaining in control. This distinction has implications, especially when considering the possibility of concurrent access from multiple independent containers.

3. Capabilities and Limitations of the Google Coral TPU

The Google Coral TPU is a specialized hardware accelerator designed to significantly speed up the inference of machine learning models, particularly those developed using TensorFlow Lite.7 It is an ASIC that provides high computational throughput with low power consumption, making it well-suited for edge computing applications like object detection in NVR systems. A key aspect of the Coral TPU’s architecture is its limited on-device static RAM (SRAM), which is used to store the parameters of the machine learning model being executed.33 This local memory access enables faster inference compared to fetching data from external system memory.

When considering the possibility of multiple independent processes or containers simultaneously accessing a single Google Coral TPU, research indicates a fundamental limitation: a single-core Coral TPU is designed to be communicated with and utilized by only one process at a time.34 The TPU loads the machine learning model into its on-device memory, and attempting to run inference from multiple processes concurrently would likely lead to conflicts in managing this memory and the TPU’s processing pipeline. Furthermore, the on-board RAM of the Coral TPU has a limited capacity, typically sufficient for only one model at a time.33 If multiple processes required different models, or even the same model with different configurations, the TPU would need to constantly swap model data in and out of its memory. This process introduces significant latency and would severely degrade the overall inference performance, effectively negating the benefits of using the dedicated hardware. One user facing this issue was advised to implement a client-server architecture where a single process manages the TPU and handles inference requests from multiple clients in a serialized manner, further suggesting that direct simultaneous access is not a supported or efficient mode of operation.36
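The client-server idea mentioned above can be illustrated with a short sketch. This is not something Frigate or Scrypted provides, and it does not call any Coral API: TpuBroker and fake_inference are hypothetical names, and the inference function is a stand-in. It only shows the pattern of one worker owning the accelerator while requests from several clients are queued and answered one at a time.

```python
import queue
import threading


class TpuBroker:
    """Owns the accelerator in one worker thread and serves requests one at a time."""

    def __init__(self, run_inference):
        self._run_inference = run_inference  # placeholder for the real TPU call
        self._requests = queue.Queue()
        self._worker = threading.Thread(target=self._serve, daemon=True)
        self._worker.start()

    def _serve(self):
        while True:
            item = self._requests.get()
            if item is None:  # shutdown sentinel
                break
            frame, reply = item
            try:
                reply.put(("ok", self._run_inference(frame)))
            except Exception as exc:  # report the failure to the caller, keep serving
                reply.put(("error", exc))

    def infer(self, frame):
        """Called by any client thread; blocks until the worker has answered."""
        reply = queue.Queue(maxsize=1)
        self._requests.put((frame, reply))
        status, value = reply.get()
        if status == "error":
            raise value
        return value

    def close(self):
        self._requests.put(None)
        self._worker.join()


def fake_inference(frame):
    # Stand-in for a model invocation; a real broker would call the TPU runtime here.
    return f"detections for {frame}"


if __name__ == "__main__":
    broker = TpuBroker(fake_inference)
    print(broker.infer("frigate-frame-1"))
    print(broker.infer("scrypted-frame-1"))
    broker.close()
```

Across two LXC containers the queue would have to be replaced by a network or Unix socket, and both applications would need to be able to talk to such a service, which is an assumption rather than an existing feature.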

However, there is a significant exception to this single-process limitation: the Google Coral M.2 Accelerator with Dual Edge TPU (typically an E-key card).10 This specialized card incorporates two independent Coral TPU cores on a single physical module, with each core having its own dedicated PCIe interface. In this specific case, it becomes theoretically possible to dedicate one TPU core to Frigate running in one LXC container and the other TPU core to Scrypted running in the second LXC container. This requires that the Proxmox host’s motherboard supports two independent PCIe lanes on the M.2 E-key slot and that the software applications (Frigate and Scrypted) can be configured to target these individual TPU cores.10

It’s also worth noting the considerations for the Google Coral USB Accelerator.3 While it might seem like a simpler device to pass through to LXC containers, it still operates under the same fundamental single-process limitation within its driver. Additionally, when dealing with multiple high-demand applications like Frigate and Scrypted, the bandwidth limitations of the USB bus itself could become a significant performance bottleneck.

The inherent design of the single-core Google Coral TPU, optimized for efficient execution of a single machine learning model, restricts its ability to handle concurrent inference requests from multiple independent processes effectively. The limited on-board memory and the single inference pipeline are key factors contributing to this limitation. The introduction of the Dual Edge TPU model indicates a recognition of the need for parallel processing capabilities in edge AI applications, offering a potential solution for simultaneous tasks by providing two independent processing units. However, the successful utilization of both cores depends on both hardware compatibility and the software’s ability to target each core individually. Even if the operating system and virtualization technology allowed multiple containers to access the same single-core TPU concurrently, the underlying driver and hardware would likely serialize the requests, preventing true simultaneous processing and potentially leading to performance bottlenecks.

The following table summarizes the different Google Coral TPU models and their implications for concurrent access:

| Model | Interface | Number of TPUs | Potential for Simultaneous Access | Notes | Snippet IDs |
| --- | --- | --- | --- | --- | --- |
| M.2 Accelerator A+E key | M.2 (A+E key) | 1 | No | Single Edge TPU, single PCIe x1 lane. | 8 |
| M.2 Accelerator B+M key | M.2 (B+M key) | 1 | No | Single Edge TPU, single PCIe x1 lane. | 8 |
| M.2 Accelerator with Dual Edge TPU (E key) | M.2 (E key) | 2 | Potentially (with caveats) | Two independent Edge TPUs, each with a PCIe Gen2 x1 lane. Motherboard must support dual lanes on the E-key slot. Software needs to be able to target each TPU. | 8 |
| USB Accelerator | USB | 1 | No | Single Edge TPU, limited by USB bandwidth for multiple high-demand applications. | 3 |

4. Frigate and Scrypted NVR with Google Coral TPU

Frigate NVR is commonly configured to utilize the Google Coral TPU for object detection by specifying the edgetpu detector type in its config.yml file.6 The device attribute within the detector configuration can be set to pci for PCIe or M.2 connected TPUs or usb for USB-based versions. Frigate can also address multiple Coral TPUs through indices such as pci:0 and pci:1 or usb:0 and usb:1, which suggests a potential mechanism for targeting individual cores of a Dual Edge TPU.6
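As an illustration of the detector settings just described, the sketch below renders a detectors section with one edgetpu entry per TPU. The function name frigate_detectors_yaml and the detector names coral0, coral1 are arbitrary choices for this example; the type and device values are the ones named in the text, and the output should be checked against the Frigate documentation for the installed version.

```python
def frigate_detectors_yaml(count, bus="pci"):
    """Render a Frigate-style detectors section with one edgetpu entry per TPU."""
    if count < 1:
        raise ValueError("count must be at least 1")
    lines = ["detectors:"]
    for index in range(count):
        # With a single TPU the bare bus name ("pci" or "usb") is enough;
        # with several, each entry gets an index such as pci:0 or pci:1.
        device = bus if count == 1 else f"{bus}:{index}"
        lines += [
            f"  coral{index}:",
            "    type: edgetpu",
            f"    device: {device}",
        ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(frigate_detectors_yaml(2), end="")
```

For a Dual Edge TPU split between two applications, Frigate would get only one of these entries, leaving the other core for the second container.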

Scrypted NVR also supports integration with the Google Coral TPU for accelerated object detection.5 The process typically involves installing the necessary drivers on the Proxmox host and then configuring Scrypted to access the TPU, potentially by passing through the USB or PCI device to the Scrypted container.

Given the single-process limitation of the single-core Google Coral TPU, attempting to configure both Frigate and Scrypted to use the same /dev/apex_0 device node would very likely lead to conflicts.43 It is highly probable that only one of the applications would be able to successfully initialize and utilize the TPU at any given time, resulting in errors or the other application failing to detect the accelerator.

Both Frigate and Scrypted are designed to have exclusive control over the Coral TPU for their object detection tasks. Their internal operations, such as model loading and inference request management, are not built to coordinate access to a single TPU instance with other independent applications. The configuration options within both software packages for specifying the Coral device are geared towards selecting a specific TPU for exclusive use by that particular application, without providing built-in mechanisms for sharing access.

5. Addressing Simultaneous Access: Feasibility and Considerations

Based on the analysis of the research material, simultaneous access to a single-core m.2 PCI Google Coral TPU by Frigate and Scrypted NVR running in separate LXC containers on Proxmox is generally not feasible.34 While Proxmox might allow both containers to be granted access to the /dev/apex_0 device node, the Coral TPU hardware itself can only process requests from one process at a time. Attempting to use it concurrently will likely result in one or both applications failing to initialize the TPU or encountering errors during inference.43

The notable exception to this limitation is the Google Coral M.2 Accelerator with Dual Edge TPU (typically an E-key card).10 If the user has this specific model and their Proxmox host’s motherboard supports the two independent PCIe lanes on the M.2 E-key slot, there is a theoretical possibility of configuring each LXC container to utilize one of the two independent TPU cores.10 This would likely involve identifying the distinct device nodes associated with each core (which might be /dev/apex_0 and /dev/apex_1, but this needs verification) and then configuring Frigate and Scrypted to target these individual device nodes. Frigate’s configuration using device: pci:0 and device: pci:1 suggests a potential way to achieve this, but the research does not provide explicit guidance on configuring Scrypted for a dual-TPU setup.6

The limitation of single-process access is inherent to the single-core Google Coral TPU hardware and its driver design. The Dual Edge TPU model was introduced to address the need for parallel processing, but its successful utilization for simultaneous access in separate LXCs depends on both the motherboard’s capabilities and the software’s ability to target each TPU core.

6. Potential Workarounds and Alternative Solutions

For users seeking to leverage Google Coral TPU acceleration for both Frigate and Scrypted NVR within a Proxmox environment, several potential workarounds and alternative solutions can be considered:

If the user’s m.2 PCI Coral TPU is a Dual Edge TPU model (E-key), and their motherboard supports dual PCIe lanes on the M.2 slot, they should investigate how the two TPU cores are exposed to the Proxmox host. If two device nodes (e.g., /dev/apex_0 and /dev/apex_1) are present, they could attempt to configure Frigate to use one (e.g., device: pci:0) and Scrypted to use the other (e.g., device: pci:1 or a similar specific configuration if Scrypted supports it).

The most straightforward solution to ensure dedicated acceleration for both applications is to use separate Google Coral TPUs. This would require the Proxmox server to have multiple compatible expansion slots. The user could install one m.2 PCI Coral TPU for Frigate and another for Scrypted, or a combination of an m.2 card and a USB Coral Accelerator if slots are limited. For separate m.2 cards, the configuration in Frigate might use device: pci:0 and in Scrypted, if it also uses PCI, it might be configured for device: pci:1 (assuming the host recognizes them as such). For a USB Coral, Scrypted would need to be configured to access the USB device, potentially by its bus and device ID.

As an alternative, the user could consider using their existing m.2 PCI Coral TPU for one application (perhaps Frigate, due to potentially higher demand) and adding a Google Coral USB Accelerator for the other application (Scrypted). This would necessitate installing the USB drivers on the Proxmox host and configuring Scrypted to access the USB device, typically by its bus and device ID. Frigate’s configuration would remain focused on the PCI device node. However, potential USB bandwidth limitations should be kept in mind.

Finally, the user could explore alternative hardware acceleration methods supported by Frigate and Scrypted. Both applications might be able to utilize the Proxmox host’s integrated GPU (iGPU) for video decoding, and potentially even for some aspects of object detection, although the Coral TPU is generally more efficient for the latter. Investigating the software’s documentation for options like OpenVINO (for Intel iGPUs) or other hardware acceleration frameworks might provide a fallback or supplementary solution.

The optimal solution will depend on the user’s current hardware configuration, the availability of expansion slots, and their budget for additional hardware. Using separate TPUs offers the best performance and isolation but comes with increased hardware costs.

7. Performance Implications and Recommendations

Attempting to force simultaneous access to a single-core Google Coral TPU would likely result in significant performance degradation for both Frigate and Scrypted due to resource contention and the need for frequent model swapping.33 This could lead to missed detections, increased latency, and potential system instability.

Based on the analysis, the following recommendations are provided:

- Check the Coral TPU Model: Determine the exact model of the m.2 PCI Coral TPU. If it’s a Dual Edge TPU (E-key), thoroughly investigate configuring each LXC to use a separate core.

- Consider Separate TPUs: If a single-core TPU is being used, or if utilizing both cores of a Dual Edge TPU proves difficult, the recommended approach is to use separate Google Coral TPUs if the Proxmox server has sufficient expansion capabilities.

- Prioritize One Application: If using a single TPU is the only option, consider prioritizing one application (e.g., the one with more critical detection needs) to have exclusive access to the TPU.

- Monitor Performance: Closely monitor the performance of both Frigate and Scrypted (e.g., detection rates, CPU usage, latency) if any form of shared access is attempted, especially with a Dual Edge TPU.

To test their specific setup, the user should:

- Configure one LXC (e.g., Frigate) to use the Coral TPU and verify it’s working correctly.

- Then, configure the second LXC (Scrypted) to use the same TPU and observe if it can also initialize and perform detections without errors in the first LXC. Monitor the logs of both applications for TPU-related errors.

- If using a Dual Edge TPU, check whether two /dev/apex_* devices appear on the host and attempt to configure each LXC to use one.
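While running the test steps above, it can help to see which processes actually hold the device open. The following is a rough sketch under several assumptions: it runs as root on the Proxmox host, it relies on the Linux /proc filesystem, and the helper name processes_holding is made up. The path reported for processes inside a container may differ from the host path, so treat the result as a starting point rather than proof.

```python
import os


def processes_holding(device_path):
    """Return sorted PIDs that have device_path open, based on /proc/<pid>/fd links."""
    holders = set()
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        fd_dir = f"/proc/{entry}/fd"
        try:
            fds = os.listdir(fd_dir)
        except OSError:  # process exited or we lack permission
            continue
        for fd in fds:
            try:
                if os.readlink(f"{fd_dir}/{fd}") == device_path:
                    holders.add(int(entry))
                    break
            except OSError:  # descriptor closed while we were scanning
                continue
    return sorted(holders)


if __name__ == "__main__":
    print(processes_holding("/dev/apex_0"))
```

If only one PID ever shows up while both applications are running, that is consistent with the second application failing to initialize the TPU, and its log is the next place to look.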

Forcing simultaneous access to a single-core TPU is likely to be less effective than simply using CPU-based detection for one of the applications due to the overhead and limitations of the hardware.

8. Conclusion

In conclusion, simultaneous access to a single-core m.2 PCI Google Coral TPU by Frigate and Scrypted NVR running in separate LXC containers on Proxmox is generally not feasible due to the TPU’s design for single-process operation. The most viable solutions involve either utilizing a Google Coral M.2 Accelerator with Dual Edge TPU (if hardware and software support allows) or employing separate Google Coral TPUs for each LXC container. Prioritizing one application for exclusive TPU access or exploring alternative hardware acceleration methods are also options if simultaneous Coral TPU use is not achievable. Regardless of the chosen approach, thorough testing and continuous monitoring of the system’s performance and stability are crucial to ensure optimal operation.

Now that’s an answer! How many hours of searching would it have taken with traditional web searches? I suspect A LOT! Now I only have to make a mental note to myself: Stop wasting time on traditional Google searches. Jump directly to the best (latest) free AI model. Google Gemini seems to be the best one at the time of writing. I’m sure it will be another one in a couple of weeks or months.